Basic tutorial

This tutorial demonstrates the usage of the sweights package.

We will first cook up a toy model with a discriminant variable (invariant mass) and a control variable (decay time) and use it to generate some toy data.

Then we will use a fit to the invariant mass to obtain some component pdf estimates and use these to extract some weights which project out the signal only component in the decay time.

We will demonstrate both the classic sWeights and the Custom Ortogonal Weight functions (COWs) method. See arXiv:2112.04575 for more details.

Finally, we will fit the weighted decay time distribution and correct the covariance matrix according to the description in arXiv:1911.01303.

[1]:

from types import SimpleNamespace

# external requirements

import numpy as np

from scipy.stats import norm, expon

import matplotlib.pyplot as plt

from iminuit import Minuit

from iminuit.cost import ExtendedUnbinnedNLL

# from this package

from sweights import SWeight # for classic sweights

from sweights import Cow # for custom orthogonal weight functions

from sweights import cov_correct, approx_cov_correct # for covariance corrections

from sweights.testing import make_classic_toy # to generate a toy dataset

from sweights.util import plot_binned, make_weighted_negative_log_likelihood

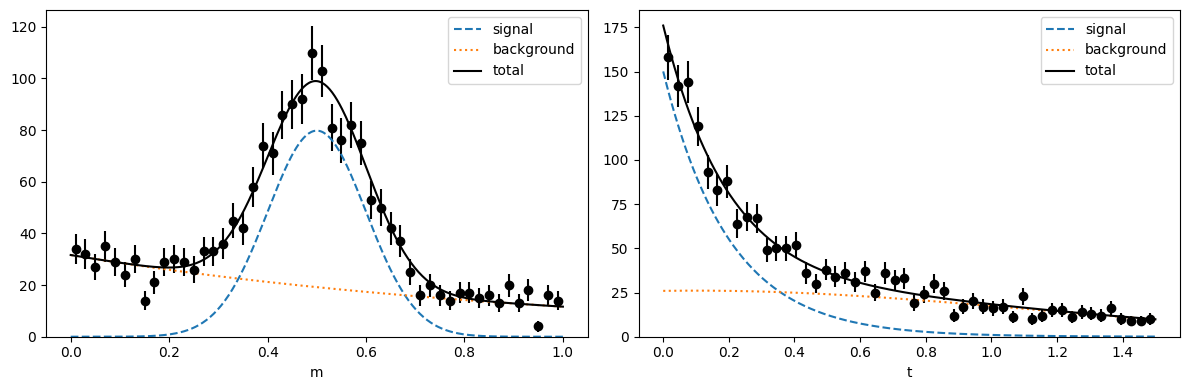

Toy model and toy data

We generate an m-distribution (for the discriminatory variable) and an independent t-distribution (the control variable). In particle physics, m is typically the invariant mass distribution of decay candidates, and t is the decay time distribution of these candidates. But any other two variables can be used, as long as they are independent in the pure signal and pure background.

[2]:

# make a toy model

true_yield = SimpleNamespace()

true_yield.s = 1000

true_yield.b = 1000

# mass

mrange = (0, 1)

m_truth = SimpleNamespace()

m_truth.mu = 0.5

m_truth.sigma = 0.1

m_truth.slope = 1

# time

trange = (0, 1.5)

t_truth = SimpleNamespace()

t_truth.slope = 0.2

t_truth.mu = 0.1

t_truth.sigma = 1.0

toy = make_classic_toy(

1,

s=true_yield.s,

b=true_yield.b,

mrange=mrange,

trange=trange,

ms_mu=m_truth.mu,

ms_sigma=m_truth.sigma,

mb_mu=m_truth.slope,

ts_mu=t_truth.slope,

tb_mu=t_truth.mu,

tb_sigma=t_truth.sigma,

)

Make pdfs, plot ground truth and toy data

[3]:

# m-density for fitting and plotting (not normalized)

def m_density(x, s, b, mu, sigma, slope):

ds = norm(mu, sigma)

snorm = np.diff(ds.cdf(mrange))

db = expon(mrange[0], slope)

bnorm = np.diff(db.cdf(mrange))

return s / snorm * ds.pdf(x) + b / bnorm * db.pdf(x)

# t-density for fitting and plotting (not normalized)

def t_density(x, s, b, slope, mu, sigma):

ds = expon(trange[0], slope)

snorm = np.diff(ds.cdf(trange))

db = norm(mu, sigma)

bnorm = np.diff(db.cdf(trange))

return s / snorm * ds.pdf(x) + b / bnorm * db.pdf(x)

def plot(toy, bins=50, npoint=400, draw_pdf=True):

fig, ax = plt.subplots(1, 2, figsize=(12, 4))

plt.sca(ax[0])

plot_binned(toy[0], bins=bins, range=mrange, color="k")

plt.sca(ax[1])

plot_binned(toy[1], bins=bins, range=trange, color="k")

if draw_pdf:

m = np.linspace(*mrange, npoint)

mnorm = (mrange[1] - mrange[0]) / bins

par = m_truth.mu, m_truth.sigma, m_truth.slope

s = m_density(m, true_yield.s, 0, *par)

b = m_density(m, 0, true_yield.b, *par)

p = s + b

ax[0].plot(m, mnorm * s, "C0--", label="signal")

ax[0].plot(m, mnorm * b, "C1:", label="background")

ax[0].plot(m, mnorm * p, "k-", label="total")

t = np.linspace(*trange, npoint)

tnorm = (trange[1] - trange[0]) / bins

par = t_truth.slope, t_truth.mu, t_truth.sigma

s = t_density(t, true_yield.s, 0, *par)

b = t_density(t, 0, true_yield.b, *par)

p = s + b

ax[1].plot(t, tnorm * s, "C0--", label="signal")

ax[1].plot(t, tnorm * b, "C1:", label="background")

ax[1].plot(t, tnorm * p, "k-", label="total")

ax[0].set_xlabel("m")

ax[0].set_ylim(bottom=0)

ax[0].legend()

ax[1].set_xlabel("t")

ax[1].set_ylim(bottom=0)

ax[1].legend()

fig.tight_layout()

plot(toy)

Fit toy data in the m-variable

This provides us with estimates for the component shapes and the component yields, which we need to apply the sWeight method.

We use an extended unbinned maximum-likelihood fit here, but an extended binned maximum-likelihood fit would work as well. We could use an ordinary fit in which the model pdf is normalized.

[4]:

# m-model for an extended maximum-likelihood fit, must return...

# - integral as first argument

# - density as second argument

# see iminuit documentation for more information

def m_model(x, s, b, mu, sigma, slope):

return (s + b, m_density(x, s, b, mu, sigma, slope))

mi = Minuit(

ExtendedUnbinnedNLL(toy[0], m_model),

s=true_yield.s,

b=true_yield.b,

mu=m_truth.mu,

sigma=m_truth.sigma,

slope=m_truth.slope,

)

mi.limits["s", "b"] = (0, true_yield.s + true_yield.b)

mi.limits["mu"] = mrange

mi.limits["sigma"] = (0, mrange[1] - mrange[0])

mi.limits["slope"] = (0, 50)

mi.migrad()

mi.hesse()

[4]:

| Migrad | |

|---|---|

| FCN = -2.696e+04 | Nfcn = 142 |

| EDM = 1.99e-05 (Goal: 0.0002) | |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s | 960 | 50 | 0 | 2E+03 | |||

| 1 | b | 1.02e3 | 0.05e3 | 0 | 2E+03 | |||

| 2 | mu | 0.494 | 0.005 | 0 | 1 | |||

| 3 | sigma | 0.097 | 0.004 | 0 | 1 | |||

| 4 | slope | 1.14 | 0.16 | 0 | 50 |

| s | b | mu | sigma | slope | |

|---|---|---|---|---|---|

| s | 2.13e+03 | -1.2e3 (-0.540) | -12.228e-3 (-0.058) | 91.430e-3 (0.458) | -1.037 (-0.144) |

| b | -1.2e3 (-0.540) | 2.18e+03 | 12.225e-3 (0.058) | -91.418e-3 (-0.453) | 1.037 (0.142) |

| mu | -12.228e-3 (-0.058) | 12.225e-3 (0.058) | 2.06e-05 | -0.002e-3 (-0.086) | -0.155e-3 (-0.219) |

| sigma | 91.430e-3 (0.458) | -91.418e-3 (-0.453) | -0.002e-3 (-0.086) | 1.87e-05 | -0.070e-3 (-0.104) |

| slope | -1.037 (-0.144) | 1.037 (0.142) | -0.155e-3 (-0.219) | -0.070e-3 (-0.104) | 0.0244 |

Compute sWeights

We first create an estimated pdfs of the signal and background component, respectively. Then we create the sWeighter object from the m-distribution, these pdfs, and the fitted yields.

Note that this will run much quicker if verbose=False and checks=False.

[5]:

def spdf(m):

return m_density(m, 1, 0, *mi.values[2:])

def bpdf(m):

return m_density(m, 0, 1, *mi.values[2:])

sweight = SWeight(

toy[0],

[spdf, bpdf],

[mi.values["s"], mi.values["b"]],

[mrange],

method="summation",

compnames=("sig", "bkg"),

verbose=True,

checks=True,

)

Initialising sweight with the summation method:

PDF normalisations:

0 1.0000000000000002

1 1.0000000000000002

W-matrix:

[[0.00072638 0.00029386]

[0.00029386 0.00070361]]

A-matrix:

[[1656.59790131 -691.86886983]

[-691.86886983 1710.19865477]]

Integral of w*pdf matrix (should be close to the

identity):

[[9.99871623e-01 2.30160489e-05]

[9.35941868e-05 1.00003883e+00]]

Check of weight sums (should match yields):

Component | sWeightSum | Yield | Diff |

---------------------------------------------------

0 | 964.8295 | 964.8295 | 0.00% |

1 | 1018.1999 | 1018.1999 | 0.00% |

Construct the COW

COW pdfs are weighted by a numerator, which we call the variance function \(I(m)\). This function is arbitrary, but there is an optimal choice, which minimizes the variance of the weights. In case of factorizing signal and background PDFs, the optimal choice is \(I(m) \propto g(m)\), where \(g(m)\) is the estimated total density of in the discriminant variable m. A solution close to optimal is to replace \(g(m)\) with a histogram, with the advantage that \(g(m)\) does not have to be explicitly constructed.

But simply using \(I(m) \propto 1\) gives almost equivalent results in this case. To use a constant density for \(I(m)\), we pass Im=None to the Cow object.

[6]:

# unity:

Im = None

# sweight equivalent:

# def Im(m):

# return m_density(m, *mi.values) / (mi.values['s'] + mi.values['b'] )

# histogram:

# Im = np.histogram(toy[0], range=mrange)

# make the cow

cow = Cow(mrange, spdf, bpdf, Im, verbose=True)

Initialising COW:

W-matrix:

[[2.91611955 0.97740504]

[0.97740504 1.06300333]]

A-matrix:

[[ 0.4956826 -0.45576779]

[-0.45576779 1.35979793]]

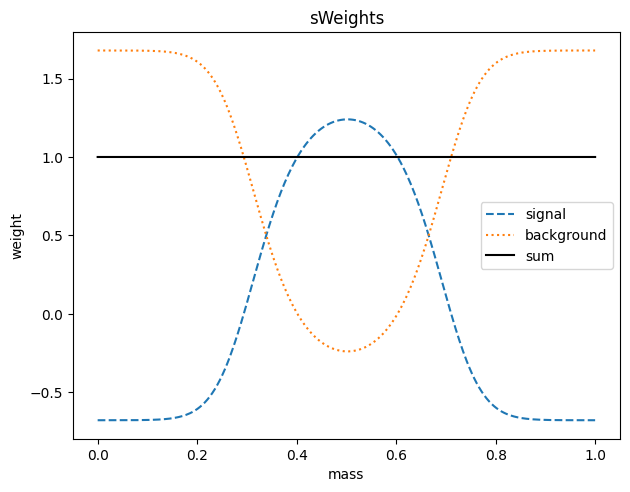

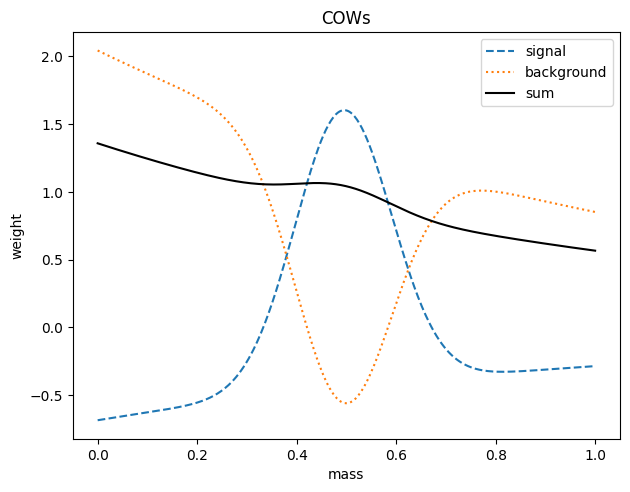

Comparison of the sweight and COW methods

We first compare the weight distributions.

[7]:

def plot_weights(x, sw, bw):

plt.figure()

plt.plot(x, sw, "C0--", label="signal")

plt.plot(x, bw, "C1:", label="background")

plt.plot(x, sw + bw, "k-", label="sum")

plt.xlabel("mass")

plt.ylabel("weight")

plt.legend()

plt.tight_layout()

for method, weighter in zip( ['sWeights','COWs'], [sweight, cow] ):

x = np.linspace(*mrange,400)

swp = weighter.get_weight(0, x)

bwp = weighter.get_weight(1, x)

plot_weights(x, swp, bwp)

plt.title(method)

The weights are not identical, because in this case we used a simple (non-optimal) variance function for the COWs.

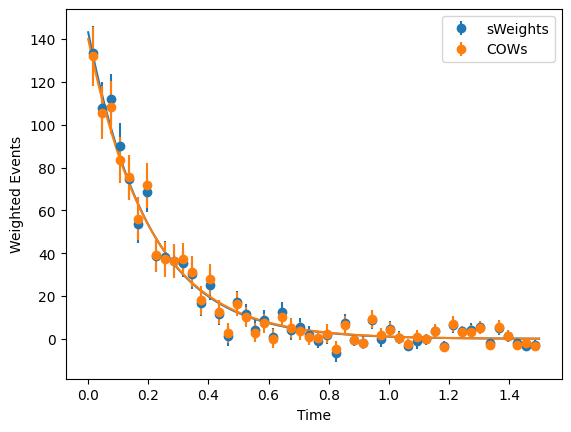

Fit weighted t-distribution

[8]:

# signal pdf in t-domain

def t_signal_pdf(x, slope):

return t_density(x, 1, 0, slope, 0, 1)

fitted_slopes = []

for method, weighter in (("sWeights", sweight), ("COWs", cow)):

# get signal weights

w = weighter(toy[0])

# do the minimisation

tmi = Minuit(

make_weighted_negative_log_likelihood(toy[1], w, t_signal_pdf),

slope=t_truth.slope,

)

tmi.limits["slope"] = (0, 10)

tmi.migrad()

tmi.hesse()

# do the correction

fitted_slopes.append(tmi.values["slope"])

# first order correction

ncov = approx_cov_correct(

t_signal_pdf, toy[1], w, tmi.values, tmi.covariance, verbose=False

)

# second order correction

hs = t_signal_pdf

ws = weighter

W = weighter.Wkl

# these derivatives can be done numerically but for the sweights / COW case

# it's straightfoward to compute them

ws = lambda Wss, Wsb, Wbb, gs, gb: (Wbb * gs - Wsb * gb) / (

(Wbb - Wsb) * gs + (Wss - Wsb) * gb

)

dws_Wss = (

lambda Wss, Wsb, Wbb, gs, gb: gb

* (Wsb * gb - Wbb * gs)

/ (-Wss * gb + Wsb * gs + Wsb * gb - Wbb * gs) ** 2

)

dws_Wsb = (

lambda Wss, Wsb, Wbb, gs, gb: (Wbb * gs**2 - Wss * gb**2)

/ (Wss * gb - Wsb * gs - Wsb * gb + Wbb * gs) ** 2

)

dws_Wbb = (

lambda Wss, Wsb, Wbb, gs, gb: gs

* (Wss * gb - Wsb * gs)

/ (-Wss * gb + Wsb * gs + Wsb * gb - Wbb * gs) ** 2

)

tcov = cov_correct(

hs,

[spdf, bpdf],

toy[1],

toy[0],

w,

[mi.values["s"], mi.values["b"]],

tmi.values,

tmi.covariance,

[dws_Wss, dws_Wsb, dws_Wbb],

[W[0, 0], W[0, 1], W[1, 1]],

verbose=False,

)

print(

f"{method:9}: "

f"naive {tmi.values[0]:.3f} +/- {tmi.errors[0]:.3f}, "

f"corrected {tmi.values[0]:.3f} +/- {tcov[0,0]**0.5:.3f}"

)

sWeights : naive 0.202 +/- 0.007, corrected 0.202 +/- 0.017

COWs : naive 0.206 +/- 0.007, corrected 0.206 +/- 0.016

[9]:

### Plot the weighted decay distributions and the fit result

sws = sweight(toy[0])

scow = cow(toy[0])

bins = 50

t = np.linspace(*trange, 400)

for i, (w, method, slope) in enumerate(

zip(

(sws, scow),

("sWeights", "COWs"),

fitted_slopes,

)

):

color = f"C{i}"

plot_binned(

toy[1],

bins=bins,

range=trange,

weights=w,

label=method,

color=color,

)

tnorm = np.sum(w) * (trange[1] - trange[0]) / bins

plt.plot(t, tnorm * t_signal_pdf(t, slope), color=color)

plt.legend()

plt.xlabel("Time")

plt.ylabel("Weighted Events");

Write sWeights into a file

There are many ways to store the sweights for later use. In the Python world, you can just pickle the arrays. Or you can use Numpy’s npz format. A slightly more organized way is to use a Pandas data frame. We show that option.

[10]:

import pandas as pd

df = pd.DataFrame()

df['m'] = toy[0]

df['t'] = toy[1]

df['sweight_ws'] = sweight.get_weight(0, toy[0])

df['sweight_wb'] = sweight.get_weight(1, toy[0])

df['cow_ws'] = cow.get_weight(0, toy[0])

df['cow_wb'] = cow.get_weight(1, toy[0])

df

[10]:

| m | t | sweight_ws | sweight_wb | cow_ws | cow_wb | |

|---|---|---|---|---|---|---|

| 0 | 0.927542 | 0.075108 | -0.678891 | 1.679019 | -0.303654 | 0.906145 |

| 1 | 0.468974 | 0.005580 | 1.217367 | -0.217423 | 1.523312 | -0.464189 |

| 2 | 0.449125 | 0.152979 | 1.180231 | -0.180283 | 1.374161 | -0.310642 |

| 3 | 0.558792 | 0.079876 | 1.171464 | -0.171515 | 1.213886 | -0.250459 |

| 4 | 0.400035 | 0.028505 | 0.992147 | 0.007819 | 0.793680 | 0.264917 |

| ... | ... | ... | ... | ... | ... | ... |

| 1978 | 0.930051 | 1.093816 | -0.678957 | 1.679085 | -0.302998 | 0.904166 |

| 1979 | 0.653689 | 0.081157 | 0.618199 | 0.381803 | 0.137224 | 0.670526 |

| 1980 | 0.382352 | 0.095900 | 0.881958 | 0.118018 | 0.560982 | 0.494386 |

| 1981 | 0.564565 | 1.170659 | 1.155841 | -0.155891 | 1.149193 | -0.195338 |

| 1982 | 0.718651 | 0.169675 | -0.085891 | 1.085961 | -0.226854 | 0.961263 |

1983 rows × 6 columns

Pandas data frames can be saved in multiple formats (see Pandas documentation). The feather format is particularly good.

However, in the particle physics world, you probably want to save the table as a ROOT file. This is easy with uproot. It will convert the data frame into a ROOT TTree automatically.

[11]:

import uproot

with uproot.recreate('outf.root') as f:

f['tree'] = df